Anthropic CEO Dario Amodei said large language model personality is starting to matter, argued that the cost to train a given model will come down, and said agents that act autonomously will need more scale and reliability.

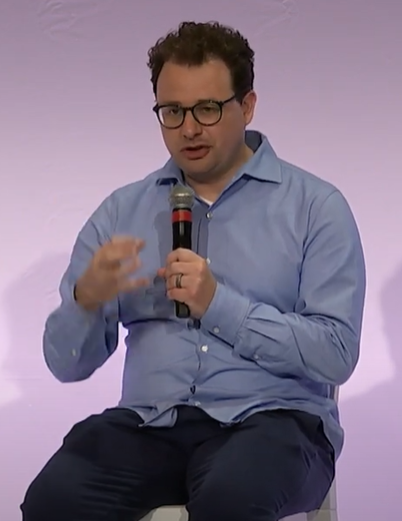

Those were some of the takeaways from Amodei, who spoke at Google Cloud Next 2024.


Model personalities will start to matter. Amodei covered the launch of Claude 3 and said a lot of effort was put into making the large language model personable. He said:

"One thing that we worked particularly hard on was the personality of the model. We've had this kind of chat paradigm of models for a while, but how engaging the model was hasn't had as much attention as reasoning capabilities. How much does it sound like a human? How warm and natural is it to talk to? We had an entire team devoted to making sure that Claude 3 is engaging."

Models need families. Amodei said the strategy for Claude 3 was to create a family of models. "Opus is the largest one. Sonnet is the smaller one, but faster and cheaper. Haiku is very fast and very cheap," he said. "Enterprises have different needs. Opus is very good at performing difficult tasks where you have to do exact calculations and those calculations have to be accurate. Sonnet is the workhorse model in the middle. I'm excited about Haiku because it outperforms almost all of its intelligence class while being fast and cheap."


Costs of training and inference. Amodei said costs for training and inference are coming down and will continue to fall, but more will be spent on training models. He said:

"I think the cost of training a particular model is going to go down very drastically but the models are so economically valuable that the amount of money that's spent on training is going to continue growing exponentially. We'll eat up all the efficiency gains at least at the higher end of models. Within Anthropic we measure things in units we call effective compute. I think that is going to go up 10x per year. That can't last forever, and no one knows for sure how long it'll last, but that's where we are right now."

How LLMs will develop over the next few years. Amodei said model intelligence will come from pure scale. Future gains in reliability and in the ability to handle specialized tasks will come from more scale and from multimodality, with image, video and audio inputs. There will also be interactions with the physical world, maybe even robotics.

Hallucinations will also be a key challenge. "We have substantial teams to reduce the amount of hallucination at the present in models," said Amodei.

"The final thing I expect to see in the next year or two is agents, models acting in the world," he added. "We've seen lots of instantiations of agents so far, but we haven't seen anything yet."

Enterprise use cases. Amodei said that as models get smarter and are trained for longer, they become much better at coding tasks. Healthcare and biomedicine will also be key use cases, as will finance and legal. "These use cases often involve reading long documents, which Claude 3 has gotten better at relative to previous models," said Amodei.

Corporate use cases appear to be split evenly between internal tools that make employees more productive and customer-facing applications. Consumer-facing companies will enable users to do more sophisticated tasks by coupling APIs.

Amodei said the cost of models for these use cases will become less of an issue since they'll be right-sized for the task at hand.

The importance of prompt engineering. Amodei said enterprises should spend time with prompt engineers to test models and make sure they work as expected.

He said:

"We are still trying to figure out how our own models work. A large language model is a very complicated object. When we deploy it, there's no way for us to figure out everything that it's capable of ahead of time. One of the most important things we do is just providing good prompt engineering support. It sounds simple, but 30 minutes with the prompt engineer can often make an application work when it wasn't before, or get better at handling errors.

I always recommend to an enterprise customer just meet with one of our prompt engineers for half an hour. It might completely transform your use case. There's a big difference between demos and actual deployment."

Safety and reliability. Anthropic recently published a paper on jailbreaking models. Amodei also said partnerships with Google Cloud revolve around security and reliability. Enterprises need both reliability and security to scale deployments of generative AI.

Amodei said short-term concerns for models revolve around bias and misleading answers when important decisions need to be made in industries like finance, insurance, credit and legal. His larger concern is that models will become increasingly powerful. He said:

"I think it's going to be possible for folks to misuse models. I worry about misuse of biology. I worry about cyberattacks. We have something called a responsible scaling plan that's designed to detect those threats, which honestly are not really very present today. We're only starting to see the beginning of them. So, every time we release a new model, we run them through this. We run tests to see if we are getting any closer to the world where we would be worried about these risks being present in models. And so far, the answer has always been no, but they're a little bit better at these tasks than they were before. Someday, the answer will be yes. And then we have a prescribed set of safety procedures that we'll take on the model when that is the case. The other side of the risks is as models become more autonomous."

When models become agents and more autonomous, they can take actions without humans overseeing them. "I think there will be very substantial risks in this area, and we'll have to have policies. We'll have to mitigate them," said Amodei, who noted that enterprises will ask about those concerns as much as they ask about data privacy and hallucinations today.

Large custom models. Amodei said that in the future enterprises won't be choosing between a small custom model and a large general one. The right fit will be a large custom model, with LLMs customized for biology, finance and other industries.

What needs to happen beyond LLMs to create agents that take actions on your behalf? Amodei said, "It's kind of an unexplored frontier." He said:

"One of my guesses is that if you want an agent to act in the world it requires the model to engage in a series of actions. You talk to a chat bot, it only answers and maybe there's a little follow-up. With agents you might need to take a bunch of actions, see what happens in the world or with a human and then take more actions. You need to do a long sequence of things and the error rate on each of the individual things has to be pretty low. There are probably thousands of actions that go into that. Models need to get more reliable because the individual steps need to have very low error rates. Part of that will come from scale. We need another generation or two of scale before the agents will really work."
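Amodei's point about per-step error rates can be made concrete with simple arithmetic. The sketch below assumes, as a simplification that is not from the talk, that every step in an agent's action chain fails independently with the same probability p, so a chain of n steps completes cleanly with probability (1 - p)^n. The function names are hypothetical and only illustrate the compounding effect.

```python
def chain_success_probability(per_step_error: float, num_steps: int) -> float:
    """Probability that all steps succeed, assuming independent, identical per-step error."""
    if not 0.0 <= per_step_error <= 1.0:
        raise ValueError("per_step_error must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return (1.0 - per_step_error) ** num_steps


def max_error_for_target(target_success: float, num_steps: int) -> float:
    """Largest per-step error rate that still reaches target_success over num_steps.

    Solves (1 - p) ** n == target for p under the same independence assumption.
    """
    if not 0.0 < target_success <= 1.0:
        raise ValueError("target_success must be in (0, 1]")
    if num_steps <= 0:
        raise ValueError("num_steps must be positive")
    return 1.0 - target_success ** (1.0 / num_steps)


if __name__ == "__main__":
    steps = 1000  # "thousands of actions", per the quote; 1,000 used for illustration
    for error in (0.01, 0.001, 0.0001):
        p = chain_success_probability(error, steps)
        print(f"per-step error {error:.4f}: P(all {steps} steps succeed) = {p:.4f}")
    print(f"max per-step error for 90% success: {max_error_for_target(0.9, steps):.2e}")
```

Under these assumptions, a 1% per-step error rate means a 1,000-step chain almost never finishes cleanly, and even 0.1% succeeds only about 37% of the time, which is why the individual steps "need to have very low error rates."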