AMD launched its Instinct MI300X processor and outlined generative AI model collaborations and software plans. After all, Nvidia can't have all the generative AI infrastructure fun, right?

CEO Lisa Su, speaking at AMD's Data Center and AI Technology Premiere, outlined the company's roadmap to gain more artificial intelligence and data center workloads.

While Instinct MI300X was the headliner, AMD laid out a broad plan to not only take share from Intel but take on Nvidia in GPUs. Cloud providers and companies are looking to upgrade and consolidate infrastructure as they look to roll out generative AI. "Generative AI and LLMs have changed the landscape," said Su. "At the center of this are GPUs."


Su outlined a family of Instinct processors built from chiplets that can be swapped out to optimize for different workloads. The family is led by the MI300X, which is designed for generative AI. AMD launched the MI300A at CES and it is sampling now.

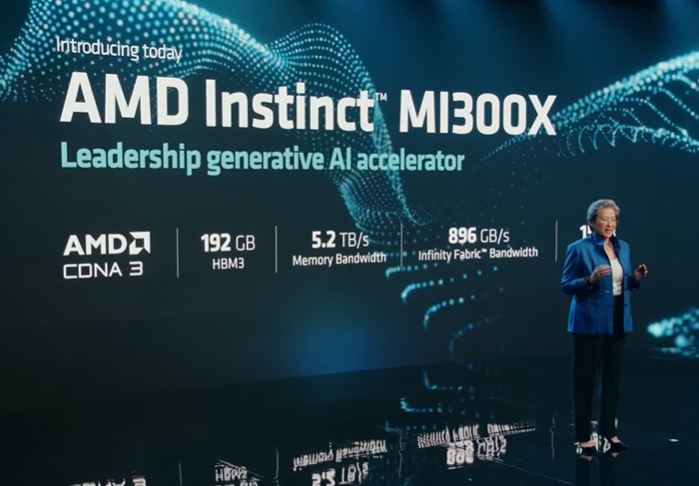

AMD said the MI300X has an advantage because it can run models directly in memory to speed up training. AMD demoed the MI300X running the Falcon LLM in real time. Su said more memory and memory bandwidth mean you can run more inference jobs per GPU than before.

The MI300X is based on the next-gen AMD CDNA 3 accelerator architecture and supports up to 192 GB of HBM3 memory.

The MI300X will come with the AMD Instinct Platform, which will include 8 MI300X processors and 1.5 TB of HBM3 memory in an industry-standard server infrastructure. That last point is key since AMD is making a case that its platform will lower the total cost of ownership for generative AI workloads.
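The memory figures above can be checked with simple arithmetic. The sketch below is illustrative only: the 192 GB and 8-GPU numbers come from AMD's announcement, while the function names, the 2-bytes-per-parameter (16-bit weights) assumption and the 40-billion and 175-billion parameter model sizes are hypothetical choices made for the example. It counts model weights only and ignores the KV cache, activations and runtime overhead, so real capacity needs would be higher.

```python
# Figures quoted in the article.
HBM3_PER_GPU_GB = 192   # MI300X memory capacity
GPUS_PER_PLATFORM = 8   # MI300X processors in the AMD Instinct Platform


def platform_memory_gb(gpus: int = GPUS_PER_PLATFORM,
                       per_gpu_gb: int = HBM3_PER_GPU_GB) -> int:
    """Aggregate HBM3 capacity across all GPUs in one platform."""
    return gpus * per_gpu_gb


def weights_footprint_gb(params_billions: float,
                         bytes_per_param: int = 2) -> float:
    """Weights-only estimate in GB (assumption: 16-bit weights by default).

    One billion parameters at one byte each is roughly 1 GB, so the
    estimate is simply parameters (in billions) times bytes per parameter.
    """
    return params_billions * bytes_per_param


def fits_on_one_gpu(params_billions: float,
                    bytes_per_param: int = 2,
                    per_gpu_gb: int = HBM3_PER_GPU_GB) -> bool:
    """True if the weights alone fit in a single GPU's memory."""
    return weights_footprint_gb(params_billions, bytes_per_param) <= per_gpu_gb


if __name__ == "__main__":
    total = platform_memory_gb()
    print(f"Platform memory: {total} GB = {total / 1024:.1f} TB")

    # Hypothetical model sizes, chosen only to illustrate the calculation.
    for size in (40, 175):
        need = weights_footprint_gb(size)
        print(f"{size}B params: ~{need:.0f} GB of weights, "
              f"fits on one GPU: {fits_on_one_gpu(size)}")
```

Under these assumptions, eight 192 GB processors give 1,536 GB, which matches the 1.5 TB AMD quotes, and a model whose weights fit in one GPU's memory avoids being split across several devices.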

Su said AI workloads are AMD's most promising market. She made the case that AMD's AI platform has the ecosystem of processors, software and partnerships to make gains.

"There's no question that AI will be the key driver for silicon consumption for the foreseeable future," said Su. "We are still early in the life cycle of AI, but when we try and size it, we think the TAM can go from $30 billion this year to $150 billion in 2027."
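For a sense of scale, the growth rate implied by Su's market estimate can be worked out directly. This is a rough sketch, not AMD's own calculation: the function name is hypothetical, and it assumes "this year" means 2023, so the span to 2027 is four years of compounding.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1 / years) - 1


START_TAM_B = 30.0   # $ billions, "this year" per Su
END_TAM_B = 150.0    # $ billions in 2027 per Su
YEARS = 4            # assumption: "this year" is 2023, so 2023 -> 2027

if __name__ == "__main__":
    rate = implied_cagr(START_TAM_B, END_TAM_B, YEARS)
    print(f"Implied CAGR: {rate:.1%}")  # about 49.5%
```

A fivefold increase over four years works out to roughly 50% growth per year under that assumption.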

Victor Peng, AMD President, said the company is growing its software ecosystem and development. "While this is a journey, we've made progress with platforms in deployments today," said Peng, who walked through AMD's AI runtimes, libraries, compilers and tools, as well as collaborations with PyTorch and Hugging Face.


AMD Momentum with CPUs

Su said EPYC is growing share in the enterprise and among cloud providers, and the 4th generation "Genoa" EPYC processor is ramping up in the data center. She noted that GPUs are critical to AI workloads, but CPUs including EPYC account for the compute behind training models in many cases.

"Data center workloads are becoming increasingly specialized," said Su. AMD's argument is that data centers will increasingly be optimized for workloads instead of focusing on general-purpose use cases. Optimized workloads will need to combine the chipmaker's CPUs, GPUs, programmable processors and networking.

As generative AI takes root, enterprise customers are likely to use the cloud for some workloads with owned data centers for sensitive data. Citadel Securities outlined how the company has data center capacity leveraging AMD for research and low-latency trading.

AMD outlined Bergamo, a 4th Gen AMD EPYC processor for cloud-native workloads with up to 128 Zen 4c cores designed for density and energy efficiency. AMD said Bergamo has a 35% smaller footprint and better performance per watt while keeping full software compatibility. Bergamo is shipping in volume to hyperscale providers.

The chip giant also launched Genoa-X, new 4th Gen EPYC processors focused on technical computing workloads such as simulations and product design. AMD launched the second generation of AMD 3D V-Cache Technology, which will be used in Genoa-X. Genoa-X will have four SKUs based on core count, with systems from Dell Technologies, Lenovo and HPE on deck.

AMD highlighted the P4 DPU architecture, which optimizes data flows and is programmable so it can simplify management and offload CPU resources. AMD's Pensando P4 DPUs are being used in switches in current workloads as well as new designs. AMD said it will bring the Smart Switch to the enterprise for edge computing applications in retail, telco and smart city.

Hyperscale Cloud Deployments

AMD also featured a bevy of partners during its keynote. Among the highlights:

- AWS will build new EC2 instances with AMD. AWS will use 4th Gen EPYC processors in EC2 M7a instances, which will have 50% better performance than the previous generation. The instances are available in preview today. AWS will roll out more EPYC EC2 instances over time.

- Oracle announced new E5 instances with 4th generation EPYC.

- Meta is optimizing EPYC for its infrastructure with plans to optimize its server designs for Genoa and Bergamo, which will be used for generative workloads. Meta is using AMD-based servers for storage, transcoding and scale-out deployments.

- Microsoft Azure will offer high-performance computing instances based on Genoa-X. Instances are available with AMD's 3D V-Cache technology.