MongoDB launched new Atlas features and integrations with Microsoft Azure, Google Cloud and Amazon Web Services as well as an expanded partner program. The effort, announced at MongoDB.local NYC, is designed to make it easier for developers to scale MongoDB applications across clouds and edge infrastructure.

The company's strategy revolves around flexibility and accessing data across multiple locations, said Scott Sanchez, Vice President of Marketing at MongoDB.

"Not every AI model is going to be in every file, or every region and some workloads may only be possible in a specific location or specific hyperscaler," said Sanchez. "So, flexibility really matters. Data comes from all these different places and sources and formats, and being able to put that in one place and represented in a single document-based model is crucial in this AI world."

Here's the breakdown of what was announced at MongoDB.local NYC, which will also include an investor session with Wall Street analysts.

MongoDB Atlas: Stream Processing GA, Search Nodes on Azure, Edge Server

MongoDB outlined new features in MongoDB Atlas and the general availability of MongoDB Atlas Stream Processing.

The company said the new capabilities are designed to make it easier to build, deploy and scale data applications and services. Sahir Azam, Chief Product Officer at MongoDB, said Atlas is aimed at helping customers optimize workloads and reduce costs, not just build applications.

MongoDB Atlas Stream Processing, for instance, lets developers combine data in motion with data at rest to power applications that draw on Internet of Things (IoT) devices, inventory feeds and browsing behavior.

MongoDB also said Atlas Search Nodes are now available on Microsoft Azure, a move that pairs the company's data platform with Azure's generative AI workloads and services. Enterprises will be able to use Atlas Vector Search and Atlas Search on Azure to optimize generative AI applications.

The Azure launch puts Atlas Search Nodes on all three hyperscalers; the service was already available on AWS and Google Cloud.

MongoDB also launched Atlas Edge Server so customers can run distributed applications closer to end users. AI workloads are increasingly moving to the edge for lower latency and proximity to data.

Atlas Edge Server, available in public preview, is a local instance that can synchronize data when connectivity is spotty, supports data tiering and maintains a local data layer for low latency.

"MongoDB advances its capabilities with key AI additions to its scope. Database support for AI is key for enterprises to keep using databases – without having to export data to a third party AI data platforms," said Constellation Research analyst Holger Mueller. "This makes MongoDB even more attractive to power next generation applications for an enterprise and combined with multi-cloud capabilities is compelling."

MongoDB AI Applications Program

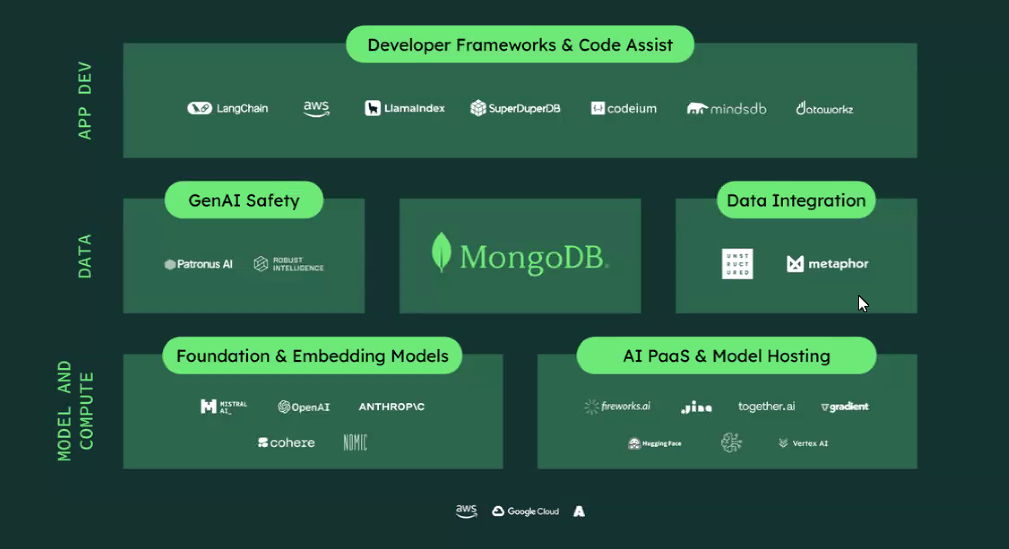

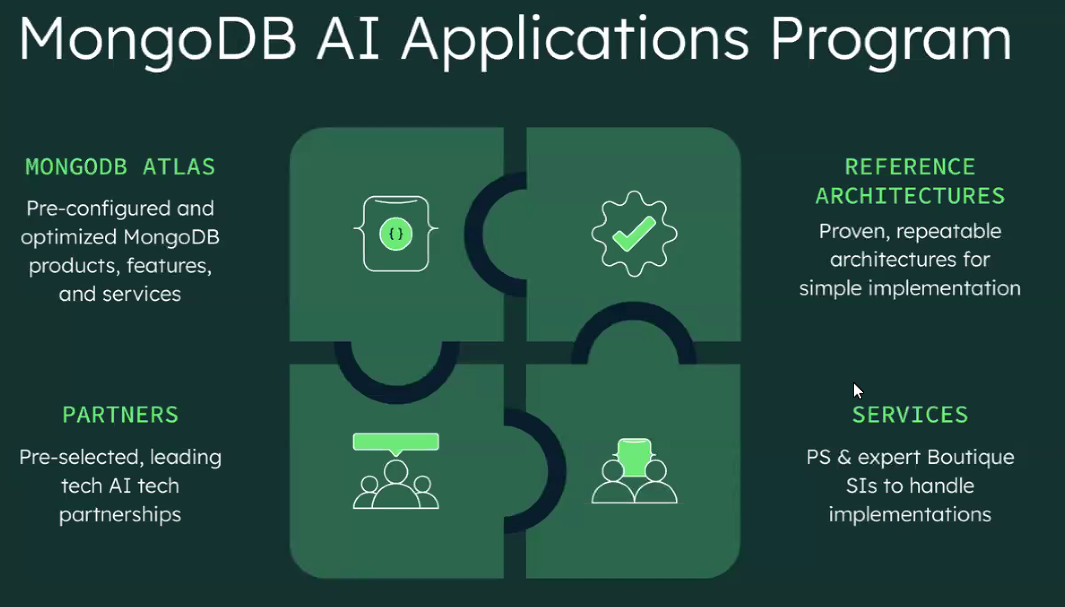

MongoDB launched the MongoDB AI Applications Program, or MAAP, which brings together technology partners, foundation models and advisory services to help enterprises deploy generative AI applications faster.

The goal of MAAP is to create an integrated stack from MongoDB and partners including AWS, Google Cloud and Microsoft Azure that will feature frameworks and models from Anthropic, Cohere, LlamaIndex, LangChain and a host of others. The stack features MongoDB Atlas and aims to be turnkey for enterprises looking to build genAI apps.

MAAP includes:

- Strategies and roadmaps for genAI applications and support services via MongoDB Professional Services and consulting partners.

- A curated selection of foundational models for multiple use cases from Anthropic, Cohere, Meta, Mistral, OpenAI and others.

- Reference architectures, integration technology and prescriptive guidance.

- AI jump-start sessions with industry experts.

MongoDB Atlas Vector Search integration with Amazon Bedrock

MongoDB said it has integrated Atlas Vector Search with Knowledge Bases for Amazon Bedrock. The integration gives enterprises the ability to build genAI apps using managed foundation models.

The integration is aimed at the many customers the two companies share.

According to MongoDB, customers can use the Atlas Vector Search and Bedrock integration to customize large language models from multiple providers with real-time data that MongoDB converts into vector embeddings.

MongoDB, Google Cloud to optimize Gemini Code Assist for MongoDB developers

MongoDB and Google Cloud said they will optimize Gemini Code Assist to provide MongoDB-specific suggestions, answers and code.

Gemini Code Assist will give developers information, documentation and best practices for MongoDB code.

MongoDB said that Gemini Code Assist is trained on publicly available datasets and offers full codebase awareness as well as integration with various code editors and repositories.

The two companies said the optimization for Gemini Code Assist and MongoDB will be available in the "coming months."

Constellation Research's take

Mueller said MongoDB is focusing on what CxOs care about:

"It's good to see MongoDB not only covering the GenAI basics with the usual vector announcement that has become staple for all database vendors – but also focusing what really matters to CxO. What matters to CxOs is building next generation applications that fuel Enterprise Acceleration. The ability to build multicloud applications that can execute code all the way to the edge is critical. And good to see the multicloud strategy also in the AI announcements by partnering with the relative best that the cloud platforms have to offer – Bedrock for AWS and Gemini for Google Cloud."