Foundational model debates--large language models, small language models, orchestration, enterprise data and choices--are surfacing in ongoing enterprise buyer discussions. The challenge: You may need a crystal ball and architecture savvy to avoid previous mistakes such as lock-in.

In recent days, we have seen the following:

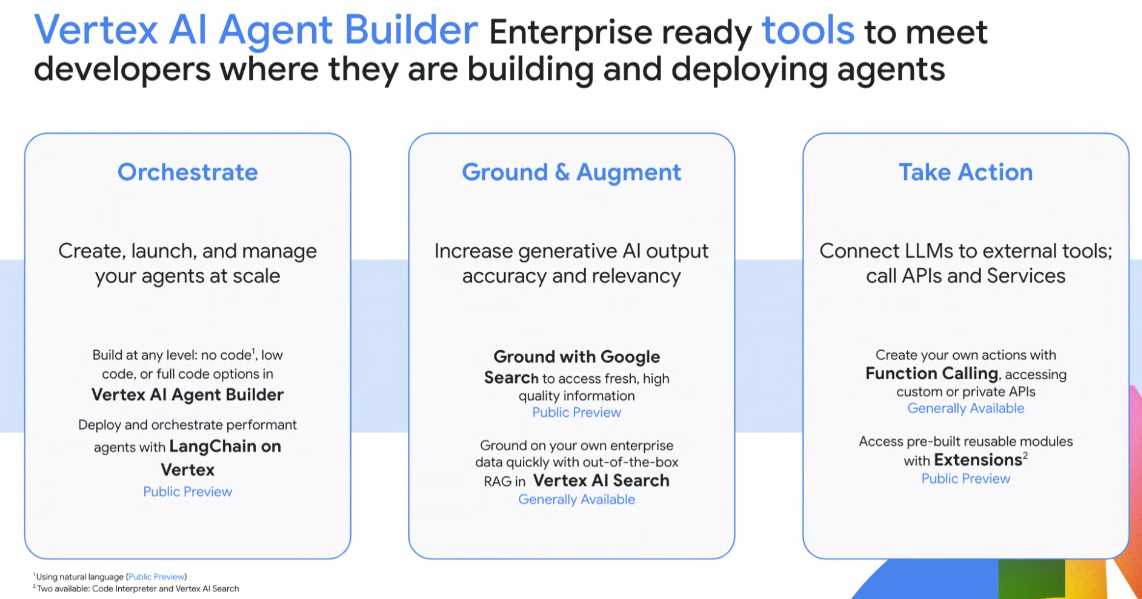

- At Google Cloud Next, Google Cloud outlined how it is deploying Gemini 1.5 Pro across multiple use cases and business roles. The gist is that Google Cloud wants Vertex AI to be the model orchestration platform for building a bevy of generative AI agents. Google Cloud's Model Garden serves up more than 130 model choices, but there's no question that Gemini is the star. "We are now building generative AI agents," said Google Cloud CEO Thomas Kurian. "Agents are intelligent entities that take action to help you achieve specific goals."

- AWS highlighted its thinking on open model choice and on combining Bedrock with Q to become the generative AI platform of choice. AWS has the Titan model, but it emphasizes model choice and is positioned to be the Switzerland of LLMs.

- Constellation Research's latest BT150 CXO call revolved around small models vs. large ones. Vendors such as ServiceNow have championed smaller use-case specific models for efficiency and costs.

- Anthropic CEO Dario Amodei said that in the future enterprises won't be choosing between a small custom model and a large general one. The right fit will be a large custom model, with LLMs customized for biology, finance and other industries.

- Archetype AI raised $13 million in seed funding and launched Newton, its foundational model for the physical world. Newton is built to understand the physical world and data signals from a range of environmental sensors. Newton highlights how LLMs are being aimed at greenfield opportunities.

- For good measure, Microsoft will likely talk about building customized Copilots and model choices at Build in May. A few sessions on Azure AI Studio have highlighted orchestration.

Dion Hinchcliffe: Enterprises Must Now Cultivate a Capable and Diverse AI Model Garden

Now that's a lot to talk about considering how enterprises need to plow ahead with generative AI, leverage proprietary data and pick a still-in-progress model orchestration layer without being boxed in. The dream is that enterprises will be able to swap models as they improve. The reality is that swapping models may be challenging without the right architecture.

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

Will enterprise software vendors use proprietary models to lock you in? Possibly. There is nothing in enterprise vendor history that would indicate they won't try to lock you in.

The crystal ball says that models are likely to be commoditized at some point. There will be a time when good enough is fine as enterprises toggle between cost, speed and accuracy. Models will be like compute instances that enterprises can simply swap as needed.
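To make the cost, speed and accuracy toggle concrete, here is a minimal Python sketch of choosing a model the way one might choose a compute instance. Everything in it is an assumption for illustration: the `ModelSpec` fields, the `pick_model` helper, the model names and the numbers are hypothetical and do not describe any vendor's catalog or pricing.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelSpec:
    """Hypothetical spec sheet for a model, analogous to an instance type."""
    name: str                  # illustrative label, not a real product
    cost_per_1k_tokens: float  # assumed unit cost in dollars
    latency_ms: float          # assumed typical response time
    accuracy: float            # assumed score on an internal benchmark, 0 to 1


def pick_model(catalog: Iterable[ModelSpec],
               min_accuracy: float,
               max_latency_ms: float) -> Optional[ModelSpec]:
    """Return the cheapest model that is 'good enough', or None if none qualifies."""
    eligible = [
        m for m in catalog
        if m.accuracy >= min_accuracy and m.latency_ms <= max_latency_ms
    ]
    # Among models that clear the bar, cost decides.
    return min(eligible, key=lambda m: m.cost_per_1k_tokens, default=None)


# Made-up catalog used only to exercise the function.
CATALOG = [
    ModelSpec("small-task-model", 0.10, 120, 0.78),
    ModelSpec("mid-general-model", 0.50, 400, 0.86),
    ModelSpec("large-general-model", 3.00, 900, 0.93),
]

if __name__ == "__main__":
    choice = pick_model(CATALOG, min_accuracy=0.85, max_latency_ms=500)
    print(choice.name if choice else "no model meets the thresholds")
```

The sketch only works if models come with comparable, trusted numbers for each field, which is the open question in the commoditization debate.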

It's too early to say that LLMs will go commodity, but there's no reason to think they won't. Should that commoditization occur, platforms that can create, manage and orchestrate models will win. However, there is a boom market for models for the foreseeable future.

AWS Vice President of AI Matt Wood noted that foundation models today are "disproportionately important because things are moving so quickly." Wood said: "It's important early on with these technologies to have that choice, because nobody knows how these models are going to be used and where their sweet spot is."

Wood said that LLMs will be sustainable because they're going to be trained with cost, speed and power efficiency in mind. These models will then be stacked to create advantage.

Will these various models become a commodity?

"I think foundational models are very unlikely to get commoditized because I think that there's just there is so much utility for generative AI. There's so much opportunity," said Wood, who noted that LLMs that initially tried to boil the AI ocean are being split into different prices and sizes. "You're starting to see divergence in terms of price per capability. We're talking about task models; I can see industry focus models; I can see vertically focused models; models for RAG. There's just so much utility and that's just the baseline for where we're at today."

He added:

"I doubt these models are going to become commoditized because we haven't yet built a set of criteria that helps customers evaluate models, which is well understood and broadly distributed. If you're choosing a compute instance, you can look at the amount of memory, the number of CPUs, the number of cores and networking. You can make some determination of how that will be useful to you."

In the meantime, your architecture needs to ensure that you aren't boxed in as models leapfrog each other in capabilities. Rapid advances in LLMs mean that you’ll need to hedge your bets.
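One common way to hedge is to keep application code behind a thin, provider-neutral interface so that changing models becomes a configuration change. The Python sketch below is a rough illustration of that idea, not a reference design: `TextModel`, `EchoModel`, `ModelRouter` and the use-case names are all hypothetical, and `EchoModel` stands in for an adapter that would wrap a real vendor SDK.

```python
from typing import Dict, Protocol


class TextModel(Protocol):
    """The only surface application code is allowed to depend on."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter; a real one would call a vendor's SDK inside complete()."""
    def __init__(self, label: str) -> None:
        self.label = label

    def complete(self, prompt: str) -> str:
        return f"[{self.label}] {prompt}"


class ModelRouter:
    """Maps business use cases to whichever registered model is currently assigned."""
    def __init__(self) -> None:
        self._models: Dict[str, TextModel] = {}
        self._assignments: Dict[str, str] = {}  # use case -> model name

    def register(self, name: str, model: TextModel) -> None:
        self._models[name] = model

    def assign(self, use_case: str, name: str) -> None:
        if name not in self._models:
            raise KeyError(f"unknown model: {name}")
        self._assignments[use_case] = name

    def complete(self, use_case: str, prompt: str) -> str:
        # Callers name the use case, never the vendor or the model.
        return self._models[self._assignments[use_case]].complete(prompt)


if __name__ == "__main__":
    router = ModelRouter()
    router.register("vendor-a-large", EchoModel("vendor-a-large"))
    router.register("vendor-b-small", EchoModel("vendor-b-small"))

    router.assign("support-summary", "vendor-a-large")
    print(router.complete("support-summary", "Summarize this ticket"))

    # Swapping models is one reassignment; calling code does not change.
    router.assign("support-summary", "vendor-b-small")
    print(router.complete("support-summary", "Summarize this ticket"))
```

The interface is the easy part. Prompts, evaluation sets and fine-tuning data tend to be tuned to a specific model, so a swap still needs testing even when the code path is unchanged.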

Constellation Research analyst Holger Mueller noted:

"We are only at the beginning of the foundation model era. The layering of public LLMs that are up to speed on real world developments and logically merged with industry knowledge, SaaS packaging, and functional enterprise domain specific models are going to be crucial for gen AI success. Bridging the gap from real world aware and fitting an enterprise makes genAI workable and effective."